This article summarizes some of the work we did while introducing gRPC into our backend, using Go to demonstrate gRPC in practice.

Compatibility with HTTP/JSON Interfaces

Since we are migrating an existing system, two questions must be addressed:

- How do existing services call gRPC interfaces?

- How do new services call existing HTTP interfaces?

Since we had already used ProtoBuf to define the mapping between each RPC and its HTTP endpoint, the ideal is to treat ProtoBuf as the single source of truth: generate both a gRPC client and an HTTP client from it, preferably behind the same abstract type so that callers are shielded from the underlying transport protocol.

Envoy or gRPC-Gateway?

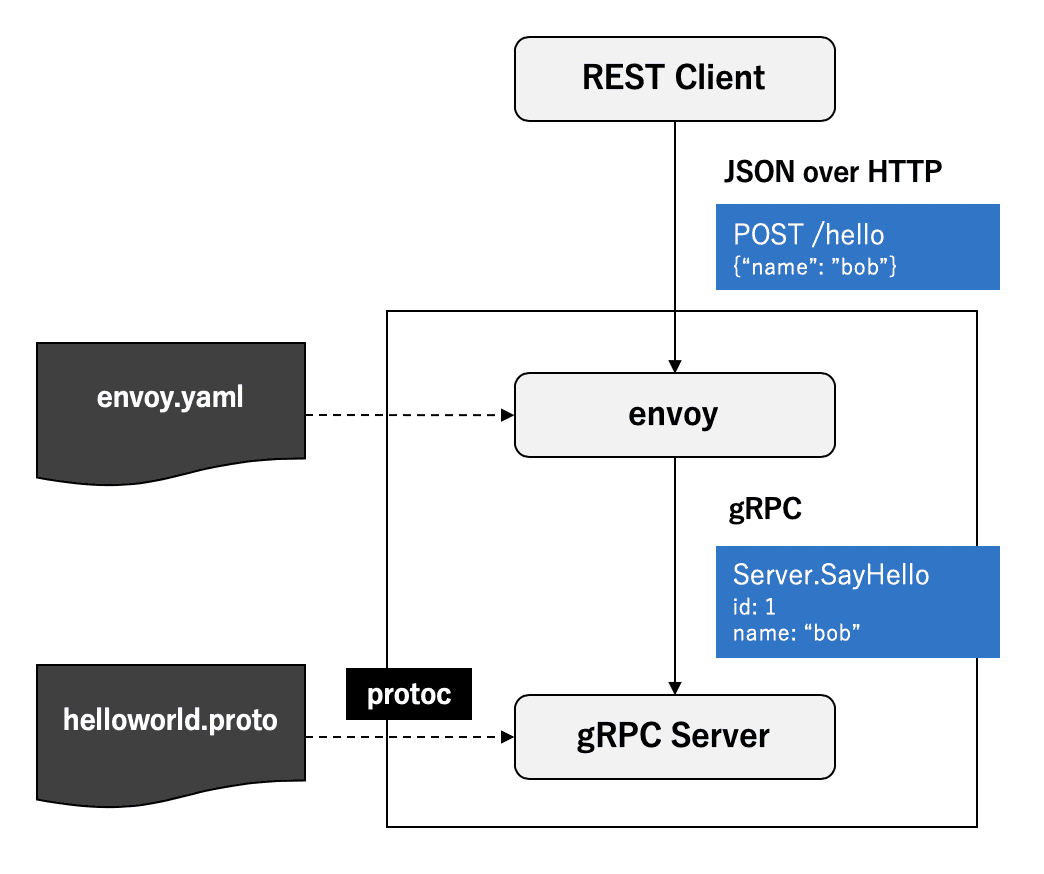

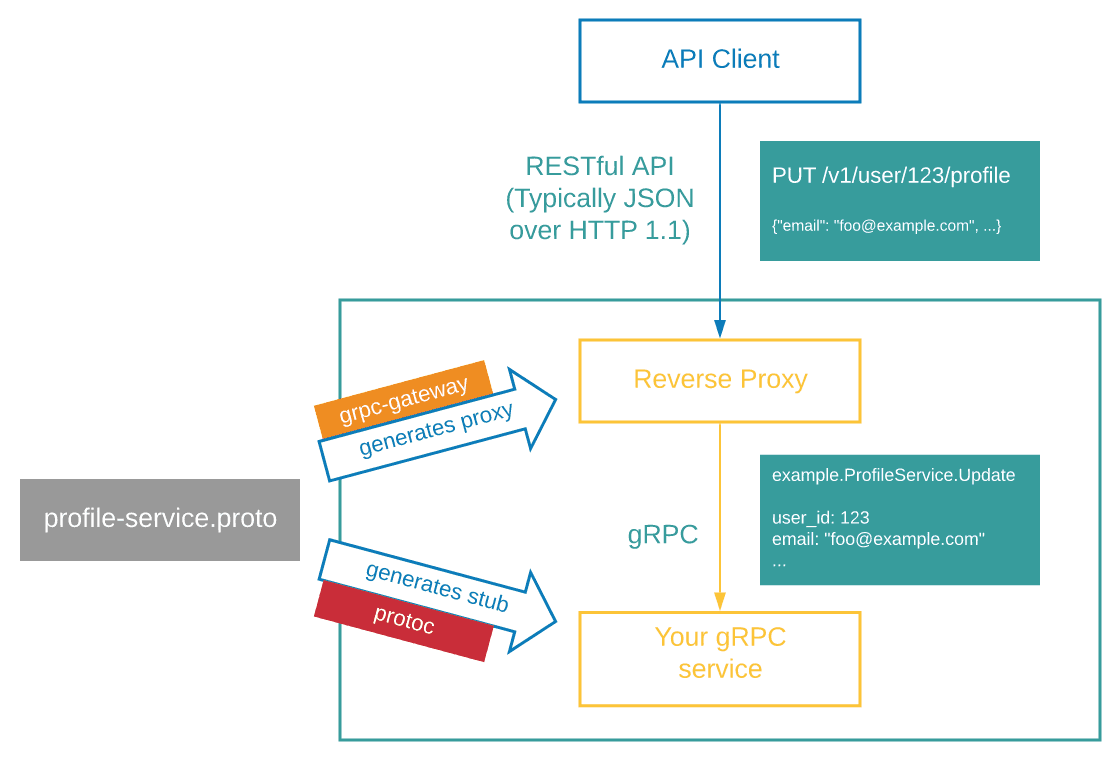

The industry already offers several solutions for making gRPC compatible with existing HTTP interfaces, such as https://github.com/envoyproxy/envoy and https://github.com/grpc-ecosystem/grpc-gateway, among others. Most of them, however, convert between protocols in a gateway placed in front of the service.

Envoy requires running a separate Envoy gateway process as a sidecar next to the service, which converts between protocols through its gRPC-JSON transcoder. This is an ideal and elegant solution: protocol conversion sinks into the infrastructure, works for every language, and lets the service itself focus purely on its gRPC implementation, keeping the code simple.

gRPC-Gateway, by contrast, generates an HTTP handler from the ProtoBuf definitions that accepts HTTP requests and translates them into calls to the gRPC service. It can be embedded in the existing service code and started together with the service.

Compared with gRPC-Gateway, the Envoy solution adds an extra external dependency to the service. For the initial migration phase, we chose gRPC-Gateway: it keeps all logic within a single process, which makes debugging easier and is more straightforward and pragmatic.

Defining the ProtoBuf RPC Interface Mapping

ProtoBuf already defines a canonical JSON mapping for its types, and Google's API design also provides a standard for mapping HTTP interfaces to gRPC methods (HttpRule). gRPC-Gateway performs its protocol conversion based on this standard, which is very expressive and covers almost everything a conventional HTTP interface needs:

- Mapping query parameters, path parameters, and the request body onto the request message

- All HTTP methods

- Multiple HTTP endpoints bound to the same RPC

```protobuf
import "google/api/annotations.proto";

service UserService {
  rpc GetUser(GetUserRequest) returns (GetUserResponse) {
    option (google.api.http) = {
      get: "/internals/users/{id}"
      additional_bindings: {
        post: "/internals/user/getById"
        body: "*"
      }
    };
  }
}
```

For more details, refer to the comments on Google's HttpRule definition and to the gRPC documentation, both of which explain the protocol and its usage in detail.

We then only need to run protoc-gen-grpc-gateway on the ProtoBuf definitions to generate the corresponding HTTP-to-RPC mapping code.
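As a rough illustration of what the generated mapping does for a binding such as get: "/internals/users/{id}", the toy Go matcher below captures path variables and copies them into the request message. It is a hypothetical sketch, not gRPC-Gateway's implementation: it handles only literal segments and single-segment {field} variables, whereas HttpRule templates also support the * and ** wildcards, nested field paths, {var=...} sub-templates, and :verb suffixes.

```go
package main

import "strings"

// matchTemplate is a toy matcher for HttpRule-style path templates such as
// "/internals/users/{id}". It supports only literal segments and
// single-segment {field} variables, returning the captured variables.
func matchTemplate(template, path string) (map[string]string, bool) {
	tSegs := strings.Split(strings.Trim(template, "/"), "/")
	pSegs := strings.Split(strings.Trim(path, "/"), "/")
	if len(tSegs) != len(pSegs) {
		return nil, false
	}
	vars := make(map[string]string)
	for i, t := range tSegs {
		if strings.HasPrefix(t, "{") && strings.HasSuffix(t, "}") {
			if pSegs[i] == "" {
				return nil, false // a variable must capture a non-empty segment
			}
			vars[strings.TrimSuffix(strings.TrimPrefix(t, "{"), "}")] = pSegs[i]
			continue
		}
		if t != pSegs[i] {
			return nil, false // literal segment mismatch
		}
	}
	return vars, true
}

// GetUserRequest is a hypothetical stand-in for the generated message.
type GetUserRequest struct{ ID string }

// bindGetUser shows how a captured {id} would populate the request message.
func bindGetUser(path string) (*GetUserRequest, bool) {
	vars, ok := matchTemplate("/internals/users/{id}", path)
	if !ok {
		return nil, false
	}
	return &GetUserRequest{ID: vars["id"]}, true
}
```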

Mounting gRPC-Gateway

gRPC-Gateway offers several ways to mount a service (the generated functions are named after the service, e.g. RegisterUserServiceHandlerFromEndpoint):

- RegisterHandlerServer: binds a server implementation. gRPC-Gateway converts HTTP requests and calls the server implementation directly in-process; streaming requests are not supported.

- RegisterHandlerFromEndpoint: binds a target address; gRPC-Gateway dials it and acts as a reverse proxy.

- RegisterHandler: binds an existing gRPC client connection (*grpc.ClientConn) to the server, through which gRPC-Gateway reverse-proxies requests.

- RegisterHandlerClient: binds a gRPC client; gRPC-Gateway converts HTTP requests into calls on that client.

The reverse-proxy mode is currently the recommended one, since it preserves gRPC's capabilities to the greatest extent (calling the server implementation directly bypasses features such as gRPC server interceptors).

So we start a gRPC server and an HTTP server in the same process, listening on two different ports. The HTTP server intercepts requests that match certain rules and forwards them to the gRPC server through gRPC-Gateway:

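```go
import (
	"context"
	"log"
	"net"
	"strings"

	"github.com/grpc-ecosystem/grpc-gateway/v2/runtime"
	"github.com/labstack/echo/v4"
	"google.golang.org/grpc"
	"google.golang.org/grpc/credentials/insecure"
)

// HTTP
gw := runtime.NewServeMux() // create the gRPC-Gateway ServeMux
// Register the target gRPC service; gRPC-Gateway dials it and reverse-proxies requests.
err := service.RegisterHandlerFromEndpoint(context.Background(), gw, ":9090",
	[]grpc.DialOption{grpc.WithTransportCredentials(insecure.NewCredentials())})
if err != nil {
	log.Fatal(err)
}
e := echo.New()
// Echo handles regular HTTP routing. Pre-middleware runs before routing, so
// matching requests are intercepted at the outermost layer and never pass
// through any other HTTP middleware.
e.Pre(func(next echo.HandlerFunc) echo.HandlerFunc {
	return func(c echo.Context) error {
		if strings.HasPrefix(c.Request().URL.Path, "/internals/") {
			gw.ServeHTTP(c.Response(), c.Request())
			return nil
		}
		return next(c)
	}
})
e.Use(MiddlewareA)
e.Use(MiddlewareB)
e.GET("/v1/user", handler)
// ...
go e.Start(":3000")

// gRPC
l, err := net.Listen("tcp", ":9090")
if err != nil {
	log.Fatal(err)
}
srv := grpc.NewServer()
// ...
go srv.Serve(l)
```

Listening on the same port?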

Another popular approach in the community is https://github.com/soheilhy/cmux, which dispatches traffic based on the protocol (HTTP/1.1 vs. HTTP/2) and the Content-Type: if a request is HTTP/2 with Content-Type application/grpc, it is forwarded directly to the gRPC server. This effectively adds another gateway inside the service process and lets the gRPC and HTTP services share a single port, which lowers the cognitive cost for callers to some extent (they just connect to that one port without worrying about anything else).

In practice, however, we found that although this approach looks ideal, its real benefits are very limited, and it has some drawbacks:

- On process exit, the shutdown order of cmux, the gRPC server, and the HTTP server must be coordinated correctly; otherwise the process can deadlock.

- It cannot route by HTTP path directly, so requests still have to be routed again by a separate HTTP router.

Providing interfaces for the frontend

Beyond internal calls, we can also use gRPC to provide interfaces for the frontend. Most of our interfaces can now be defined directly in gRPC, with the gateway converting clients' HTTP requests into gRPC calls. The benefits are obvious:

- Strongly typed input and output

- OpenAPI documentation generated directly from ProtoBuf

- The service process only needs to implement the gRPC service, which is much simpler

- Protocol conversion happens in the gateway, without affecting clients

Some special interfaces still need separate handling, however, such as those that render images or web pages, or otherwise return something other than JSON.

Some pitfalls

When mapping existing HTTP interfaces to gRPC, some poor past practices can make converting data structures troublesome:

- Polymorphic Types

Many existing interfaces use polymorphic types that look like this:

```
{ "type": "POST" | "USER" | "COMMENT", "data": Post | User | Comment }
```

A type like this cannot be expressed in ProtoBuf, and it is not a portable design in general. A better design is:

```
{ "type": "POST" | "USER" | "COMMENT", "post": Post, "user": User, "comment": Comment }
```

However, if you refactor such an existing interface with gRPC, compatibility forces you to give up strong typing for that return value: define the data field as google.protobuf.Value and mark it as deprecated.
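For new interfaces, the better shape above can be sketched in Go with one optional, explicitly typed field per variant; in ProtoBuf the same three fields would naturally live in a oneof. The payload types, FeedItem, and the payload and encode helpers are hypothetical, and omitempty keeps unset variants out of the JSON.

```go
package main

import "encoding/json"

// Hypothetical payload types.
type Post struct {
	Title string `json:"title"`
}

type User struct {
	Name string `json:"name"`
}

type Comment struct {
	Body string `json:"body"`
}

// FeedItem uses one explicitly typed field per variant instead of a
// polymorphic "data" field; exactly one of Post/User/Comment should be set.
type FeedItem struct {
	Type    string   `json:"type"`
	Post    *Post    `json:"post,omitempty"`
	User    *User    `json:"user,omitempty"`
	Comment *Comment `json:"comment,omitempty"`
}

// payload returns the variant selected by Type, or nil if it is missing.
func (f FeedItem) payload() interface{} {
	switch {
	case f.Type == "POST" && f.Post != nil:
		return f.Post
	case f.Type == "USER" && f.User != nil:
		return f.User
	case f.Type == "COMMENT" && f.Comment != nil:
		return f.Comment
	}
	return nil
}

// encode renders a FeedItem as JSON; unset variants are omitted.
func encode(f FeedItem) (string, error) {
	b, err := json.Marshal(f)
	return string(b), err
}
```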

- Null Value Handling

Because ProtoBuf omits fields holding default values (such as empty maps and repeated fields) during serialization, the transcoded JSON simply lacks those fields (they are undefined). If the upstream service then reads or writes such a field directly, it may hit a null pointer. Access to RPC return values therefore needs to be null-safe, rather than wishfully assuming that a field will never be null.
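In Go, the cost of a missing field depends on how it is accessed: reading from a nil map or taking len of a nil slice is safe, but dereferencing a nil pointer or writing to a nil map panics. The sketch below uses nil-safe getters in the style protoc-gen-go generates (GetXxx methods that tolerate a nil receiver) on hypothetical User and Profile types, and decodes a body in which the empty fields are simply absent.

```go
package main

import "encoding/json"

// Hypothetical DTOs with protoc-gen-go style nil-safe getters.
type Profile struct {
	Bio string `json:"bio"`
}

type User struct {
	Name    string            `json:"name"`
	Tags    []string          `json:"tags"`
	Attrs   map[string]string `json:"attrs"`
	Profile *Profile          `json:"profile"`
}

// GetProfile is safe to call on a nil *User.
func (u *User) GetProfile() *Profile {
	if u == nil {
		return nil
	}
	return u.Profile
}

// GetBio is safe to call on a nil *Profile and returns the zero value.
func (p *Profile) GetBio() string {
	if p == nil {
		return ""
	}
	return p.Bio
}

// setAttr writes to Attrs safely: writing to a nil map panics in Go,
// so the map is created on first use.
func setAttr(u *User, k, v string) {
	if u.Attrs == nil {
		u.Attrs = make(map[string]string)
	}
	u.Attrs[k] = v
}

// decodeUser parses a response body in which empty fields may be missing.
func decodeUser(body string) (*User, error) {
	var u User
	if err := json.Unmarshal([]byte(body), &u); err != nil {
		return nil, err
	}
	return &u, nil
}
```

Chained getters such as u.GetProfile().GetBio() return zero values instead of panicking, whereas u.Profile.Bio would panic when the profile is absent.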

That said, gRPC-Gateway lets you customize the JSON codec. By default it uses protojson with EmitUnpopulated enabled, so zero-valued fields are kept in the output instead of being left undefined:

```go
// gRPC-Gateway's default marshaler
defaultMarshaler = &HTTPBodyMarshaler{
	Marshaler: &JSONPb{
		MarshalOptions: protojson.MarshalOptions{
			EmitUnpopulated: true,
		},
		UnmarshalOptions: protojson.UnmarshalOptions{
			DiscardUnknown: true,
		},
	},
}
```

In the vast majority of cases this is fine: apart from padding every JSON response with redundant data, it prevents upstream callers from hitting null pointers on direct access. We still need to check whether a caller must explicitly distinguish a zero value from null. If upstream logic branches on field == null, we need the wrapper (boxed) types from google.protobuf, such as google.protobuf.Int32Value, to represent that extra null state.
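The difference between zero and null can be sketched with plain encoding/json, using a pointer field as a stand-in for a boxed type such as google.protobuf.Int32Value (in generated Go code a wrapper field is likewise a pointer, *wrapperspb.Int32Value, whose nil value means null). UpdateUserRequest and describeAge are hypothetical.

```go
package main

import (
	"encoding/json"
	"strconv"
)

// UpdateUserRequest is hypothetical. The pointer plays the role of a
// google.protobuf.Int32Value wrapper: nil means "null / not provided",
// while a non-nil pointer to 0 means "explicitly zero".
type UpdateUserRequest struct {
	Age *int32 `json:"age"`
}

// describeAge reports whether a JSON body sets the age field, and to what.
func describeAge(body string) (string, error) {
	var req UpdateUserRequest
	if err := json.Unmarshal([]byte(body), &req); err != nil {
		return "", err
	}
	if req.Age == nil {
		return "unset", nil
	}
	return "set to " + strconv.Itoa(int(*req.Age)), nil
}
```

With a plain int32 field, {} and {"age":0} would both decode to 0 and the caller could no longer tell them apart.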

- Type Conversion

Many services do not clearly separate persistent objects (PO) from data transfer objects (DTO): the program deserializes database records directly into in-process objects and ultimately exposes them as-is to the outside world.

```go
response.Write(GetDataFromDB()) // an extreme example
```

With gRPC-generated code, DTOs are now defined explicitly and separately, so the code has to convert between types explicitly.

In languages such as Go and Java, whose type systems do not support structural subtyping for data types (Go's interfaces are structural, but its structs are not), this means writing a considerable amount of conversion code.

A language with a more flexible type system, such as TypeScript, can sidestep the problem, but that does not make it a good idea to pass database models to the outside without conversion: doing so greatly increases the coupling between systems.

Adapting the HTTP/JSON Client

In a previous article, we mentioned that when we first introduced Go, we implemented a basic HTTP client generator based on ProtoBuf. Thanks to ProtoBuf's expressiveness and the generator's sound design, we used ProtoBuf to define a large number of internal interfaces, and even many external service interfaces. The generated HTTP client gave us a general, simple RPC client and greatly improved our development efficiency.

Since this HTTP client is now widely used, we want to minimize incompatibilities while gradually migrating to gRPC, keeping the behavior of the old and new versions consistent.

The client interface was designed with gRPC compatibility in mind from the start, so only minor adjustments are needed to smoothly replace each service's RPC client:

```go
// original version
type UserServiceClient interface {
	GetUser(ctx context.Context, req *GetUserRequest, opts ...option.Option) (*GetUserResponse, error)
}

// version generated by gRPC
type UserServiceClient interface {
	GetUser(ctx context.Context, in *GetUserRequest, opts ...grpc.CallOption) (*GetUserResponse, error)
}
```

Here option.Option is our custom call option, while grpc.CallOption is the call option gRPC requires, and the two types are currently incompatible. We only need to change option.Option so that it implements both interfaces (for example by embedding grpc.EmptyCallOption, since grpc.CallOption has unexported methods); existing calls like the one below then keep working with both the old and new clients:

```go
userservice.GetUser(ctx, &userservice.GetUserRequest{}, option.WithRetry(2))
```

Client-side Fault Tolerance

gRPC is a pure RPC framework without built-in degradation or circuit breaking. Google's answer is to push traffic control and other service governance capabilities down into the infrastructure via a service mesh: the Envoy sidecar supports circuit breaking and can make the gRPC client fail fast through fault injection. Although this protects the called service when something goes wrong, the calling process should still implement its own fault tolerance, so that errors do not propagate and cause a cascading failure (an avalanche) across the entire call chain.

So when making RPC calls, we still need to handle errors with appropriate degradation:

```go
// original approach
res, err := userservice.GetUser(ctx, &userservice.GetUserRequest{})
if err != nil {
	if s, ok := status.FromError(err); ok {
		if s.Code() == codes.Unavailable {
			// degrade: fall back to an empty response
			res = &userservice.GetUserResponse{}
		} else {
			return err
		}
	} else {
		return err
	}
}
```

This is the primitive way to handle gRPC errors in Go. Writing this much error-handling logic around every single RPC call is hard to accept, so we need a more elegant solution.

Instead, we can mount a circuit-breaker plus degradation interceptor on the entire client. When the breaker trips and the call's CallOptions contain a DegradeOption, the interceptor overrides the result of the call with the fallback value:

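```go
import (
	"context"
	"errors"

	"github.com/jinzhu/copier"
	"github.com/sony/gobreaker"
	"google.golang.org/grpc"
	"google.golang.org/grpc/codes"
	"google.golang.org/grpc/status"
)

var breaker = gobreaker.NewCircuitBreaker(gobreaker.Settings{
	// ...
	// IsSuccessful decides whether an error counts toward tripping the breaker.
	IsSuccessful: func(err error) bool {
		if s, ok := status.FromError(err); ok {
			switch s.Code() {
			// ignore specific error codes: they do not indicate an unhealthy service
			case codes.InvalidArgument, codes.NotFound, codes.Canceled:
				return true
			}
		}
		return err == nil
	},
})

// intercept is a grpc.UnaryClientInterceptor; install it with
// grpc.WithUnaryInterceptor(intercept) when dialing.
func intercept(
	ctx context.Context,
	method string,
	req, reply interface{},
	cc *grpc.ClientConn,
	invoker grpc.UnaryInvoker,
	opts ...grpc.CallOption,
) error {
	// run the call through the circuit breaker
	_, err := breaker.Execute(func() (interface{}, error) {
		return nil, invoker(ctx, method, req, reply, cc, opts...)
	})
	if errors.Is(err, gobreaker.ErrOpenState) ||
		errors.Is(err, gobreaker.ErrTooManyRequests) {
		// the breaker is open: degrade if a fallback was provided
		if d, ok := extractDegrade(ctx, opts); ok {
			if err := copier.Copy(reply, d); err != nil {
				panic("cannot copy to dst")
			}
			return nil
		}
		return status.Error(codes.Unavailable, err.Error())
	}
	return err
}

// degrader is implemented by call options that carry a fallback response.
type degrader interface {
	degrade(ctx context.Context) interface{}
}

// degradeOption is one possible degrader; embedding grpc.EmptyCallOption
// makes it a valid grpc.CallOption that gRPC itself ignores.
type degradeOption struct {
	grpc.EmptyCallOption
	fallback interface{}
}

func (o degradeOption) degrade(context.Context) interface{} { return o.fallback }

// WithDegrade supplies a fallback response for a single call.
func WithDegrade(fallback interface{}) grpc.CallOption {
	return degradeOption{fallback: fallback}
}

// extract the degrade option from the call options
func extractDegrade(ctx context.Context, opts []grpc.CallOption) (interface{}, bool) {
	for _, o := range opts {
		if d, ok := o.(degrader); ok {
			return d.degrade(ctx), true
		}
	}
	return nil, false
}
```

With this interceptor in place, circuit breaking and degradation become a matter of passing a call option: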

```go
// new approach
res, err := userservice.GetUser(ctx, &userservice.GetUserRequest{}, WithDegrade(&userservice.GetUserResponse{}))
```

Service Discovery and Load Balancing

Our services rely directly on the service discovery provided by K8S: we only need to declare which K8S Service each gRPC service points to, and we can then reach it directly. To reduce maintenance costs, we declare a default K8S service name for each service in ProtoBuf through a service option, associating the gRPC service with its K8S Service:

```protobuf
import "google/api/client.proto";

service UserService {
  option (google.api.default_host) = "user-service";

  rpc GetUser(GetUserRequest) returns (GetUserResponse);
}
```

The generated code can then connect to the corresponding service directly:

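```go
func DialUserService(ctx context.Context, opts ...grpc.DialOption) (grpc.ClientConnInterface, error) {
	return grpc.DialContext(ctx, "user-service:9090", opts...)
}
```

Because gRPC multiplexes all requests over long-lived HTTP/2 connections, a plain K8S Service, which balances at the connection level, would pin a client's traffic to whichever pod it first connected to. Envoy supports gRPC natively and balances individual requests, so the service process does not need to implement load balancing itself: it only connects to the corresponding Service, and Envoy distributes gRPC traffic evenly across downstream instances.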

Health Check

K8S originally did not support gRPC probes natively (built-in gRPC probes arrived later: alpha in Kubernetes 1.23, GA in 1.27), but we can implement health checks with the standard gRPC Health Checking Protocol.

gRPC-Go already ships grpc_health_v1; all you need to do is register a health server on the gRPC server:

```go
import (
	grpc "google.golang.org/grpc"
	grpcHealth "google.golang.org/grpc/health"
	grpc_health_v1 "google.golang.org/grpc/health/grpc_health_v1"
)

grpcServ := grpc.NewServer()
grpc_health_v1.RegisterHealthServer(grpcServ, grpcHealth.NewServer())
```

We also need to declare the corresponding gRPC health check command in the K8S deployment. We can use the community-provided https://github.com/grpc-ecosystem/grpc-health-probe directly, which is a client-side implementation of grpc_health_v1.

```dockerfile
# Pre-install grpc_health_probe in the container via the Dockerfile
RUN GRPC_HEALTH_PROBE_VERSION=v0.3.1 && \
    wget -qO/bin/grpc_health_probe https://github.com/grpc-ecosystem/grpc-health-probe/releases/download/${GRPC_HEALTH_PROBE_VERSION}/grpc_health_probe-linux-amd64 && \
    chmod +x /bin/grpc_health_probe
```

```yaml
spec:
  containers:
    - name: grpc
      # ...
      readinessProbe:
        exec:
          command: ["/bin/grpc_health_probe", "-addr=:9090"]
        initialDelaySeconds: 5
      livenessProbe:
        exec:
          command: ["/bin/grpc_health_probe", "-addr=:9090"]
        initialDelaySeconds: 10
# ...
```

Others

Managing ProtoBuf Dependencies

In a large monorepo, all dependencies exist as local files, so referencing one another is very convenient. Pulling third-party ProtoBuf definitions into other projects, however, is quite inconvenient: we often have to copy third-party dependencies into a third_party directory and depend on them from there.

```
project
├── third_party
│   └── google
│       └── api
│           └── annotations.proto
└── proto
    └── service
        └── user
            └── v1
                └── api.proto
```

The author ran into exactly this problem when using the google.api annotations. This approach is clearly not elegant: it duplicates files and makes future updates tedious. Fortunately, the ProtoBuf build tool https://github.com/bufbuild/buf has officially released the Buf Schema Registry, a central registry for ProtoBuf modules, so we can use Buf to manage external ProtoBuf dependencies directly:

```yaml
# buf.yaml
version: v1
deps:
  - buf.build/googleapis/googleapis
```

With this declaration, you can import "google/api/annotations.proto" directly, and it resolves during the build.

Node.js + TypeScript

We currently have a large number of Node.js + TypeScript services. Supporting gRPC in them not only improves development efficiency but also frees server-side code from having to stay compatible with HTTP (callers just use the gRPC client directly). The official gRPC library for Node is @grpc/grpc-js. Unlike the code-generation approach used in other languages, its usual pattern is to load proto definitions dynamically at runtime (for example with @grpc/proto-loader) and then call them. That leaves no type constraints at compile time, so we lose the benefits of both the ProtoBuf schema and TypeScript.

The community offers numerous solutions to this problem. After comparing them, the author chose https://github.com/stephenh/ts-proto: instead of the JavaScript + d.ts approach, it generates pure TypeScript servers and clients. Internally it calls grpc-js directly, making it a clean wrapper around the official SDK.

It also works well with Buf:

```yaml
# node.gen.yaml
version: v1
plugins:
  - name: ts_proto
    path: ./node_modules/.bin/protoc-gen-ts_proto
    out: .
    opt:
      - forceLong=string
      - esModuleInterop=false
      - context=true
      - env=node
      - lowerCaseServiceMethods=true
      - stringEnums=true
      - unrecognizedEnum=false
      - useDate=true
      - useOptionals=true
      - outputServices=grpc-js
    strategy: all
```

Compared with grpc-go, however, grpc-js client interceptors involve more moving parts (separate hooks for the outgoing requester and the incoming listener), which raises the learning curve for developers. For details, refer to the design proposal for Node.js gRPC client interceptors.

In addition, because gRPC supports streaming, its calls cannot simply be expressed with async/await. For the sake of a uniform interface, the generated grpc-js client methods all use callbacks:

```typescript
interface ServiceClient {
  call(
    input: Request,
    callback: (err: grpc.ServiceError | null, value?: Response) => void,
  ): grpc.ClientUnaryCall
}
```

So we implemented our own client code generator, which wraps these methods at generation time to support async/await, giving a much better developer experience:

```typescript
const client = UserServiceClient.createDefaultInstance()

const user = await client.getUser(
  {
    username: "guoguo",
  },
  {
    deadline: 1000,
    interceptors: [
      interceptors.withRetry(2),
      interceptors.withDegrade({} as GetUserResponse),
    ],
  },
);
```

Summary

Introducing gRPC has improved the performance of service-to-service calls to some extent. More importantly, unified RPC definitions plus code generation give our services reliable, strongly typed communication and improve development efficiency. However, there is no silver bullet: gRPC also adds complexity to the system as a whole. We now have to live with two coexisting RPC stacks, HTTP/JSON and gRPC, and debugging gRPC is somewhat more cumbersome than debugging plain HTTP.

Using gRPC smoothly across different languages would not be possible without the many open-source tools and best practices provided by the community. Beyond those mentioned above, more can be found in the https://github.com/grpc-ecosystem/awesome-grpc repository.