Vercel's cloud functions are a thin layer over AWS Lambda. They are very easy to use and almost completely free, which makes them attractive to individual developers. Besides Node.js, Vercel supports cloud functions in Go, Python, and Ruby. Recently I tried migrating some of my personal Go backend services, originally deployed on K8S, to Vercel. This article covers some of the pitfalls I ran into along the way.

Writing Code

Basic Usage

Defining a Vercel cloud function is very simple: the path of the source file becomes the URL path of the function, so you only need to put the file in the matching directory. For Go, this means writing a Go source file, such as api/user/index.go to handle requests to /api/user, that exports a function with the http.HandlerFunc signature. Because the function name is not restricted, you can even use an uppercase Russian (Cyrillic) letter as the name, which keeps your own code from calling it by accident.

```go
package api

import "net/http"

// Ф is the exported entry point Vercel invokes for this file's path.
func Ф(w http.ResponseWriter, r *http.Request) {
	// do something
}
```

Custom Routing

As mentioned above, Vercel tells cloud functions apart by their code paths. This differs from the usual approach of registering routes in code: every new endpoint needs its own directory, so routes cannot be managed in one place, which is inflexible and hard to maintain.

What if we send every request into a single function instead? We can use Vercel's own routing configuration to override its default file-based routing. All we need to do is create a vercel.json file in the root directory of the service with the following configuration:

```json
{
  "routes": [
    {
      "src": "/.*",
      "dest": "/api/index.go"
    }
  ]
}
```

With this configuration, requests to any path are handled by api/index.go. Once we stop relying on Vercel's routing, routing requests becomes our own responsibility. Below, we build an http.Handler with echo and use it to handle every request, bridging the cloud function model and traditional web development.

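```go
package api

import (
	"net/http"

	"github.com/labstack/echo/v4"
)

// srv is built once per function instance in init and reused afterwards.
var srv http.Handler

func init() {
	ctl := NewController()
	e := echo.New()
	e.GET("/books", ctl.ListBooks)
	e.POST("/books", ctl.AddBooks)
	srv = e
}

// Ф receives every request (see vercel.json) and hands it to echo.
func Ф(w http.ResponseWriter, r *http.Request) {
	srv.ServeHTTP(w, r)
}
```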

On the other hand, routing every request through a single function has a significant drawback. No matter how rarely an endpoint is used, every call has to initialize the entire system, which runs against the serverless model. The final executable also grows large. A better approach is to follow DDD (Domain-Driven Design) and expose a separate cloud function for each domain, with domains calling each other via RPC (Remote Procedure Call). That way, each cloud function contains and initializes only the code for its own domain.

You can refer to Vercel's documentation on function cold starts:

Monorepo

Microservices often share common code (such as RPC definitions), and splitting it into separate external packages slows development down. A monorepo solves this: multiple services live in the same repository and call the shared code directly. See my previous article: Golang Practice in Jike Backend

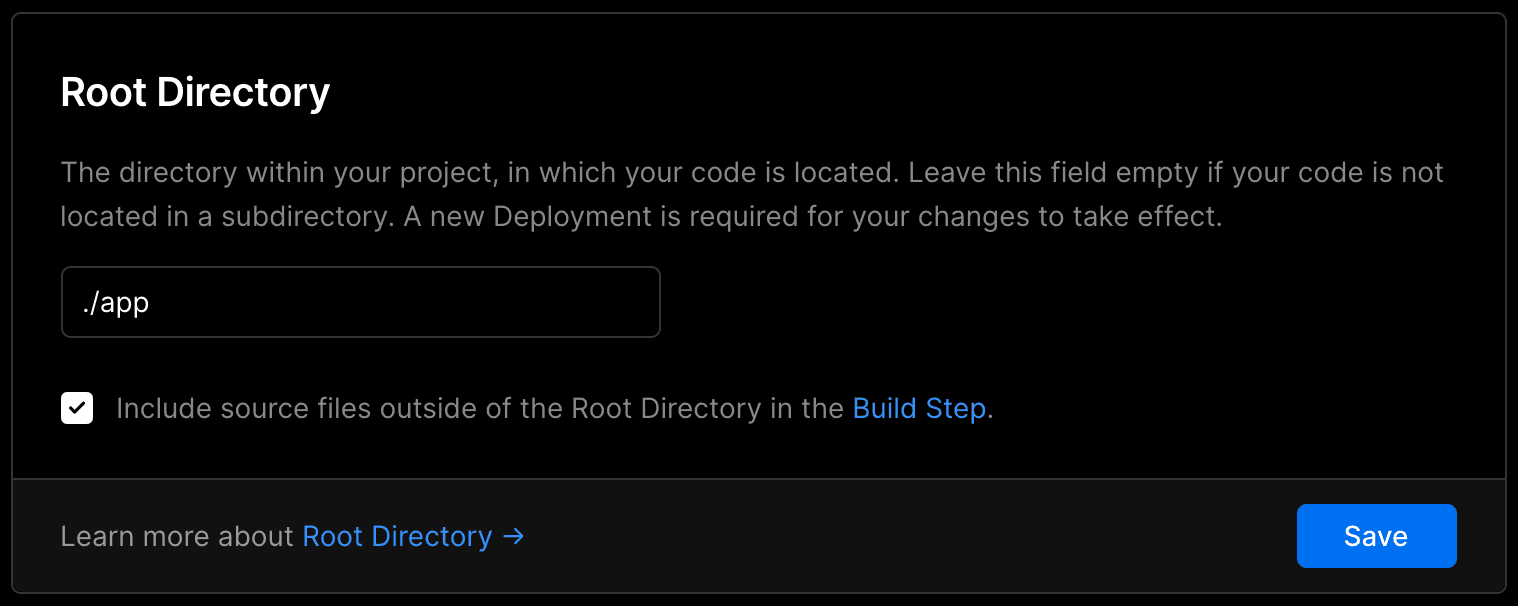

Vercel also supports monorepos by letting you set a subdirectory as a project's root directory. Unlike a conventional Go monorepo, however, Vercel requires the project root itself to contain a go.mod file rather than relying on a shared go.mod in a parent directory. We therefore need a separate go.mod in each directory:

```
.
├── pkg: shared code
│   ├── util
│   ├── ...
│   └── go.mod
├── app
│   ├── app1
│   │   ├── api
│   │   └── go.mod
│   ├── app2
│   │   ├── api
│   │   └── go.mod
│   └── ...
└── ...
```

Each service's go.mod then pulls in the shared code with a replace directive:

```
module github.com/sorcererxw/demo/app/app1

go 1.16

require (
	github.com/sorcererxw/demo/pkg v1.0.0
)

// Resolve the shared module from the local checkout instead of fetching it.
replace github.com/sorcererxw/demo/pkg => ../../pkg
```

You also need to enable the "Include source files outside of the Root Directory in the Build Step" option in the project settings so that the build environment can access the shared code in the parent directory.

Infrastructure

Unlike AWS Lambda, which comes with ready-made infrastructure, Vercel leaves much of the infrastructure for developers to assemble themselves.

RPC

Native gRPC does not fit well with plain HTTP, while Vercel can only expose endpoints through an http.Handler, so I chose twirp, an open-source RPC framework from Twitch. Unlike gRPC, twirp servers are ordinary HTTP handlers that can be mounted on any mux, and they speak JSON as well as Protobuf, which makes twirp a better fit for Vercel cloud functions.

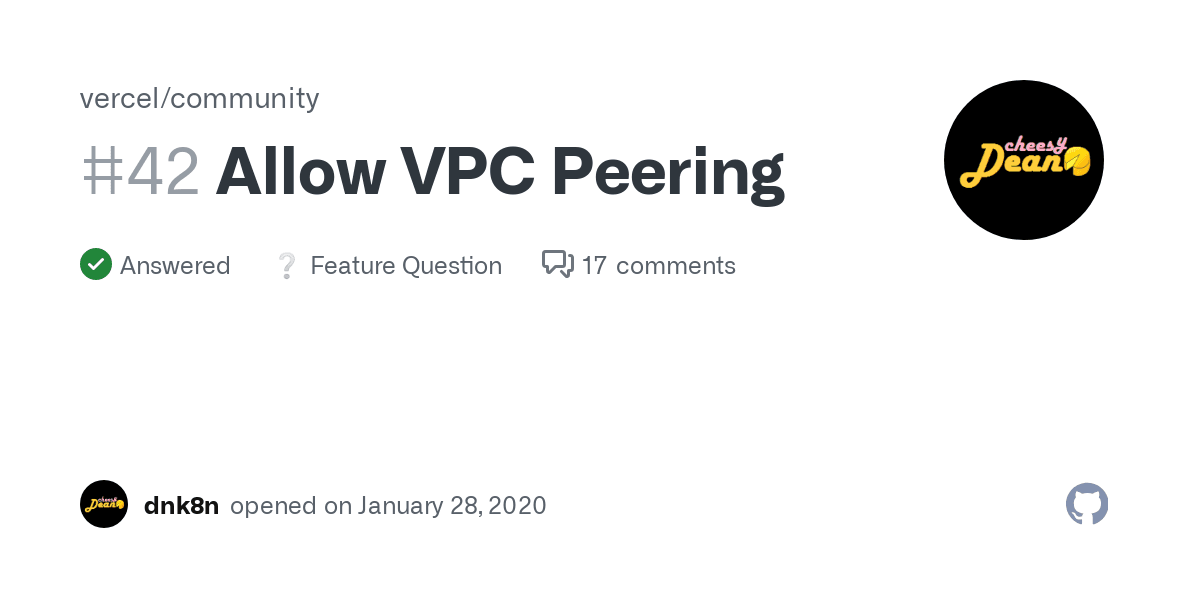

Vercel currently does not support communication between instances over a VPC network, so services can only reach each other over the public internet. This costs some performance, and developers have to secure those calls themselves. For more information, see the following issue:

Scheduled Tasks

Because cloud functions cannot run continuously, timed tasks cannot be scheduled from within the code, and Vercel does not currently support cron jobs natively. We can, however, implement cron jobs with GitHub Actions: configure a scheduled workflow whose only job is to call a specific cloud function endpoint at regular intervals.

```yaml
on:
  schedule:
    # Minute 0 of every hour; GitHub evaluates cron schedules in UTC.
    - cron: '0 * * * *'

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - run: curl -X GET https://example.vercel.app/api/cronjob
```

Log Monitoring

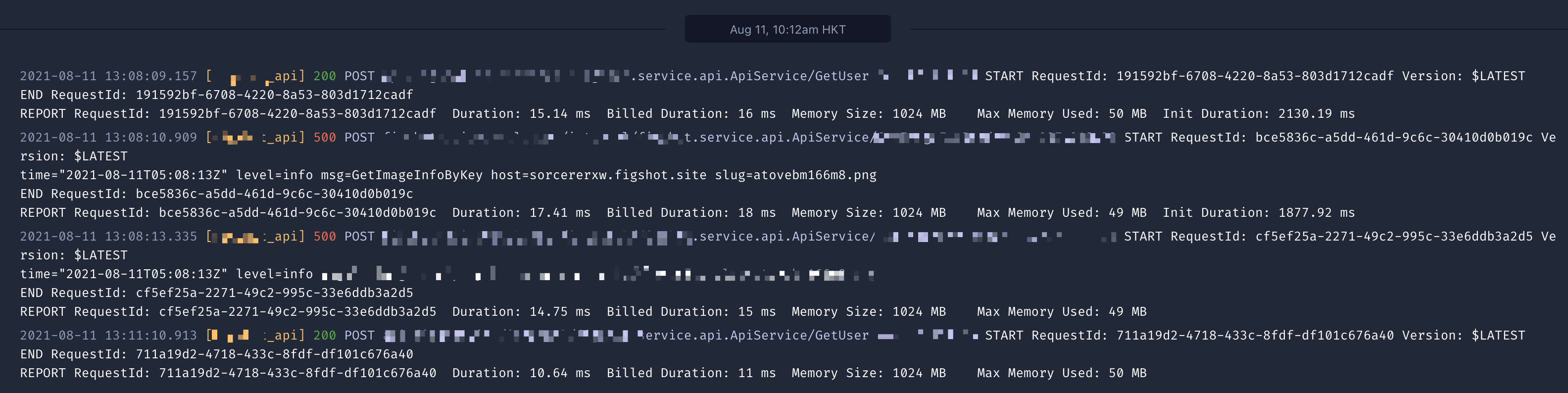

Service monitoring covers many aspects, the most basic being runtime logs. Vercel does not retain all runtime logs; it only forwards them once a Log Drain is enabled. To monitor logs over the long term, you need a third-party service that receives logs from Vercel's Log Drain.

I chose Logtail from Better Stack, which can be added to all Vercel projects with one click. Its free tier of 1 GB per month with 3 days of retention is enough for small projects getting started.

Database

Connecting to a database such as MySQL or MongoDB normally means holding a long-lived TCP connection, which is costly and wasteful for short-lived cloud functions. That is why a number of cloud database services now expose RESTful APIs for database operations, letting functions avoid connecting to the database directly and start faster.

My services mainly store data in MySQL, so I chose PlanetScale. Its wrapped MySQL driver integrates seamlessly with Go's standard database/sql package.

```go
package api

import (
	"context"
	"database/sql"

	"github.com/go-sql-driver/mysql"
	"github.com/planetscale/planetscale-go/planetscale"
	"github.com/planetscale/planetscale-go/planetscale/dbutil"
)

// TokenName, Token, Org, DB and Branch are configuration values
// (for example, read from environment variables) defined elsewhere.

// NewDB opens a *sql.DB connected to a PlanetScale database branch.
func NewDB() (*sql.DB, error) {
	client, err := planetscale.NewClient(
		planetscale.WithServiceToken(TokenName, Token),
	)
	if err != nil {
		return nil, err
	}

	mysqlConfig := mysql.NewConfig()
	mysqlConfig.ParseTime = true // scan DATE/DATETIME columns into time.Time

	sqldb, err := dbutil.Dial(context.Background(), &dbutil.DialConfig{
		Organization: Org,
		Database:     DB,
		Branch:       Branch,
		Client:       client,
		MySQLConfig:  mysqlConfig,
	})
	if err != nil {
		return nil, err
	}
	return sqldb, nil
}
```

Beyond a pleasant interface, PlanetScale offers branching for MySQL schemas: you can change table structures on a development branch and, once everything checks out, merge those changes into the production branch in one step. Overall, PlanetScale is very handy to use.

Integrations

Vercel recently launched its Integrations Marketplace, a curated set of external services suited to cloud functions, which fills many of these gaps. As Unbundling AWS argues, every AWS feature can become a standalone SaaS product; Vercel focuses on optimizing the cloud function runtime and leaves the other components to more specialized services.

Summary

Compared with running your own K8S cluster or using AWS Lambda directly, deploying services as cloud functions on Vercel can significantly cut maintenance costs, in both money and time. As the sections above show, however, Vercel's built-in features are limited, and most of the infrastructure still depends on third-party services; each additional one can reduce the system's overall reliability. For personal projects this is acceptable, and I plan to move more services to Vercel in the future.

Reference Links